What is an HTTP 404 Error?

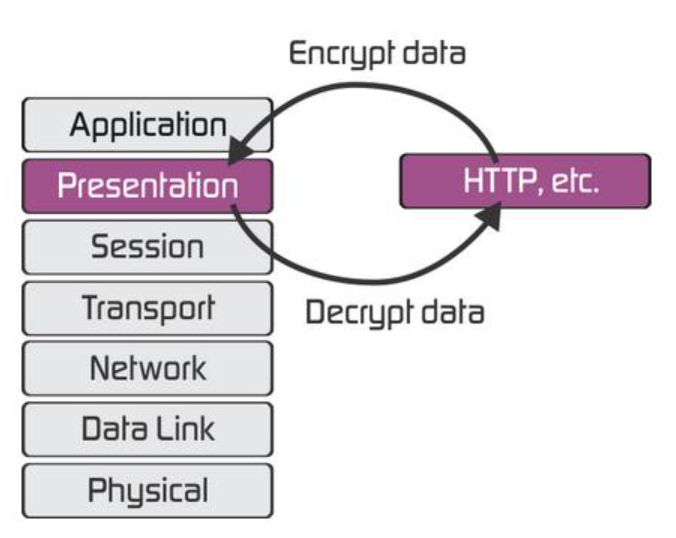

Hypertext Transfer Protocol (HTTP) is the protocol used to transmit webpage data from a web server to your web browser, which then renders it on your screen.

In the OSI model, HTTP sits at the Application Layer (Layer 7).

HTTP follows the client-server model. The client, your web browser, requests a file from a web server using a URL. This page has a URL, and that is how you got here.

If logging is enabled on your web server, you will have a record of every HTTP request to review. Try here if you need help configuring logging on an IIS server.

If a client’s HTTP request succeeds, the server returns the requested data along with a 200 status code, meaning “OK”.

If a client’s HTTP request fails because the requested file does not exist, the server returns an HTTP 404 status code, meaning “Not Found”.
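To see the two status codes side by side, here is a minimal Python sketch using only the standard library. It starts a throwaway local web server that knows about two hypothetical paths, then requests one file that exists and one that doesn’t. The paths and page content are illustrative assumptions, not anything from a real site.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical files our toy server "has"; anything else is missing.
KNOWN_PATHS = {"/", "/index.html"}


class ToyHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path in KNOWN_PATHS:
            body = b"<h1>Hello</h1>"
            self.send_response(200)  # 200 OK: file found, data follows
            self.send_header("Content-Type", "text/html")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)  # 404 Not Found: no such file on this server

    def log_message(self, format, *args):
        pass  # keep the demo quiet; a real server would log every request


def fetch_status(port, path):
    """Request a path from the local server and return (status, reason)."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        resp.read()
        return resp.status, resp.reason
    finally:
        conn.close()


def demo():
    server = HTTPServer(("127.0.0.1", 0), ToyHandler)  # port 0: pick any free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return [fetch_status(port, p) for p in ("/index.html", "/admin/config.php")]
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    for status, reason in demo():
        print(status, reason)
```

Running it prints 200 OK for the file that exists, followed by 404 Not Found for the one that doesn’t.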

Hackers, Recon and the HTTP 404 Error!

404 Not Found entries in your web server logs are often the earliest signs of surveillance, footprinting, or reconnaissance.

Unless your attacker has inside knowledge of the target, HTTP-based recon will almost certainly generate some 404 errors. It can’t be avoided: enumeration means requesting paths that may or may not exist, and the ones that don’t exist come back as 404s.

Video: Enumeration by HackerSploit

Early Recon Detection

Detecting recon early and blocking it quickly can be a game changer in the never-ending game of digital tag, where you really don’t want to be “it”.

If you do this for a living and take the topic seriously, “countersurveillance” is a better word for what we need: who is coming at us, where are they coming from, and how can we mitigate the risk?

Log Files & Free Analysis Tools

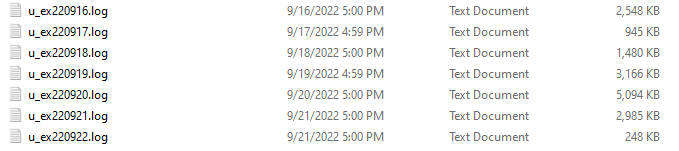

For this example, I’m going to focus on Microsoft IIS web server logs, since that is what I have handy.

Notice the naming of the log files in the screenshot below: each name contains the start date of that log in yymmdd form. For example, u_ex220916.log began on September 16, 2022.

In this example, the server creates one log per day. It can also be configured to roll logs over weekly or even monthly, but I prefer smaller files.

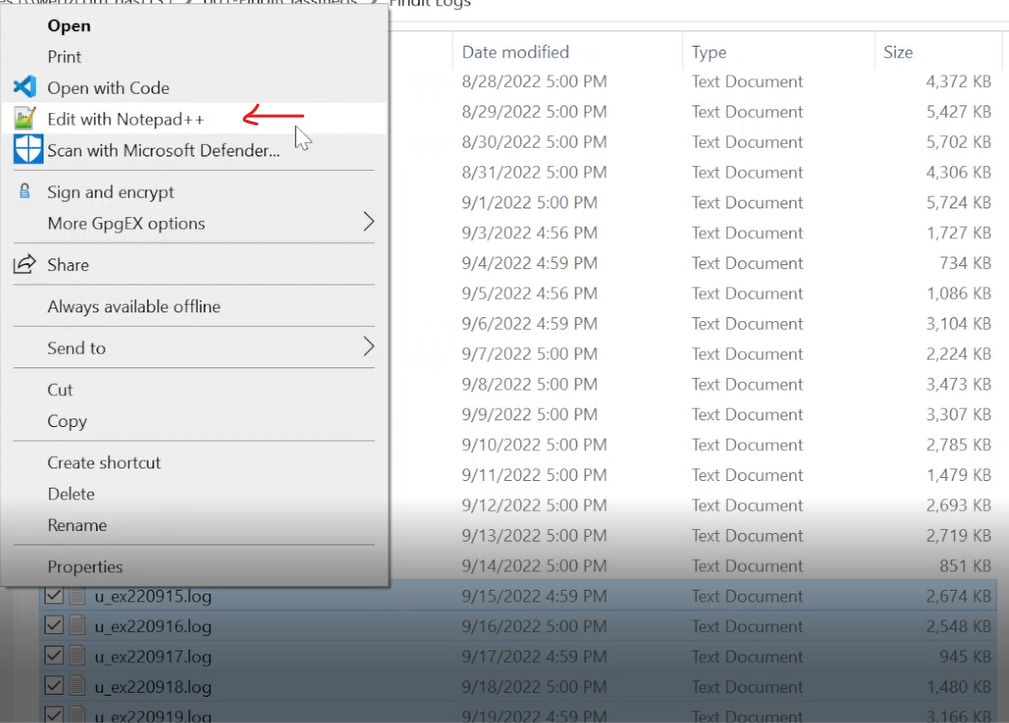
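If you script against these files, the date can be read straight from the name. A minimal Python sketch, assuming daily rollover and UTF-8 logs (the u_ prefix); hourly, weekly, and monthly logs use different name patterns and are not handled here. The function name is hypothetical.

```python
import re
from datetime import date

# Daily IIS W3C log names: "u_ex" + yymmdd + ".log" (the "u_" prefix marks UTF-8 logs).
# Assumes daily rollover; hourly, weekly, and monthly names use other patterns.
DAILY_LOG = re.compile(r"^u_ex(\d{2})(\d{2})(\d{2})\.log$", re.IGNORECASE)


def log_start_date(filename):
    """Return the start date encoded in a daily IIS log name, or None if it doesn't match."""
    m = DAILY_LOG.match(filename)
    if m is None:
        return None
    yy, mm, dd = (int(part) for part in m.groups())
    return date(2000 + yy, mm, dd)  # assumes two-digit years in the 2000s
```

For example, log_start_date("u_ex220916.log") returns date(2022, 9, 16), which makes it easy to pick out a week’s worth of files before opening them.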

Notepad++ for Reviewing Log Files

Most of the time, I just use Notepad++ to review log files on a daily or weekly basis. Notepad++ does a great job of searching across all open files.

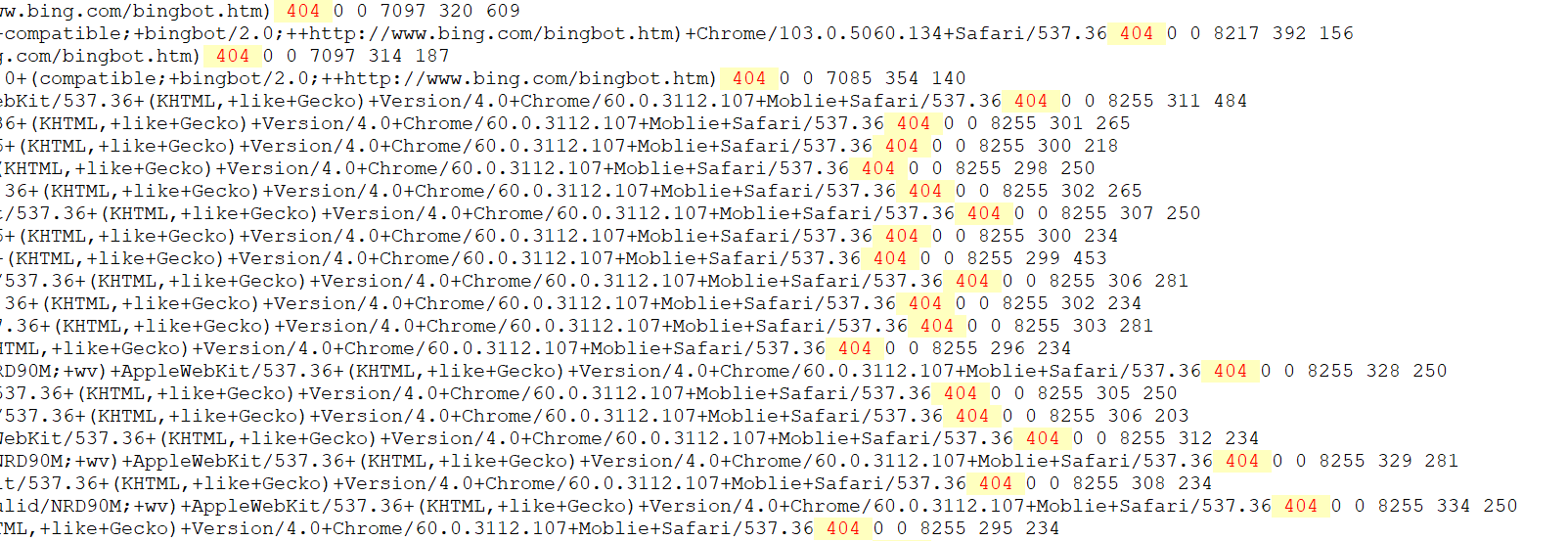

All I do is search across all open files for " 404 ". Make sure to leave a space on each side of 404; otherwise the search also matches 404 inside longer numbers, such as byte counts or time-taken values.
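The same search is easy to script if you ever want it outside Notepad++. A minimal Python sketch; the folder you pass in and the u_ex*.log file pattern are assumptions based on the daily naming described earlier. Like the Notepad++ search, it is purely textual, so it can still match a standalone 404 that appears in some other field.

```python
from pathlib import Path


def find_404_lines(log_dir, pattern="u_ex*.log"):
    """Yield (file name, line number, line) for log lines containing ' 404 '.

    The spaces on both sides stop it from matching 404 inside a longer
    number (e.g. a time-taken of 4040), but it is still a plain text match.
    """
    for path in sorted(Path(log_dir).glob(pattern)):
        with path.open(encoding="utf-8", errors="replace") as f:
            for lineno, line in enumerate(f, start=1):
                if line.startswith("#"):
                    continue  # skip W3C header directives such as #Fields:
                if " 404 " in line:
                    yield path.name, lineno, line.rstrip("\n")
```

Usage is a single loop, for example for name, n, line in find_404_lines(r"C:\temp\logs"): print(name, n, line).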

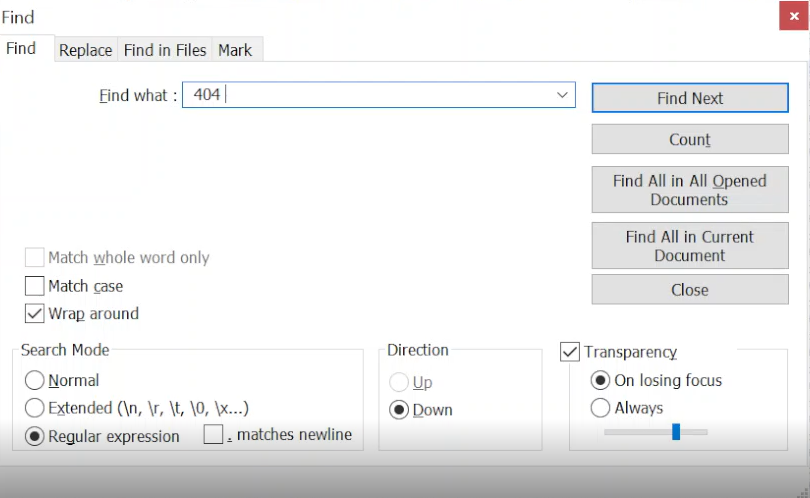

CTRL+F to Find Our 404s

With all of our log files open in Notepad++, we’ll use CTRL+F to open the Find dialog and type " 404 " (with a space on each side), then run the search across all open documents.

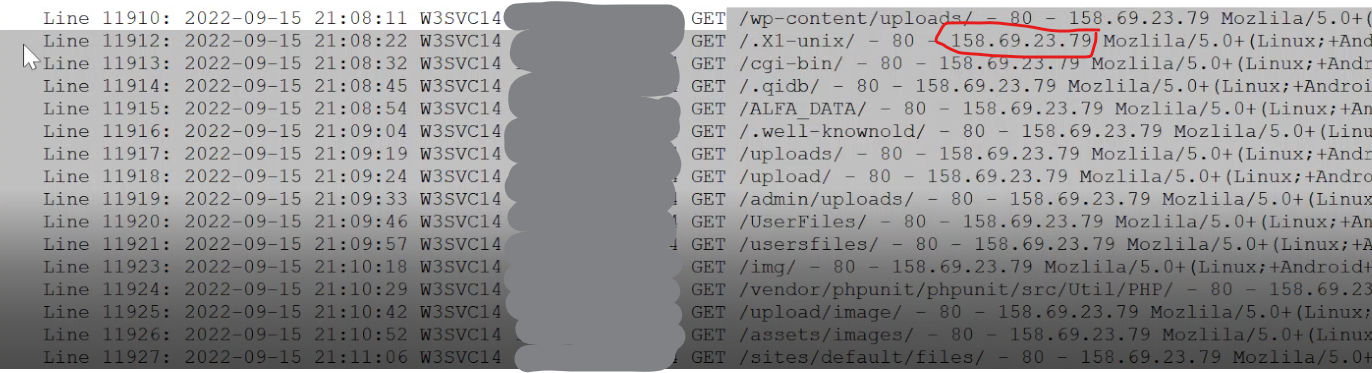

Actual Recon 404 Errors from IIS Log

Where are the 404s?

Most of the log entries are very long and difficult to display online.

This second screenshot shows where the 404 status code sits on each line of the server log: toward the end of the line, in the sc-status field.
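Rather than eyeballing the end of each line, you can let the log describe itself: IIS W3C logs include a #Fields: directive naming every column, so a script can read sc-status directly. A minimal Python sketch; the function names are hypothetical, the field list in the test data matches common IIS defaults but your server’s #Fields: line may differ, and the addresses come from documentation ranges.

```python
from collections import Counter


def parse_w3c(lines):
    """Yield one dict per log entry, keyed by the names on the latest #Fields: line."""
    fields = []
    for line in lines:
        line = line.rstrip("\r\n")
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
        elif line and not line.startswith("#") and fields:
            # IIS writes fields space-separated and replaces spaces inside values with '+'
            yield dict(zip(fields, line.split()))


def not_found_by_client(lines):
    """Count 404 responses per client IP using the sc-status column, not a text search."""
    counts = Counter()
    for entry in parse_w3c(lines):
        if entry.get("sc-status") == "404":
            counts[entry.get("c-ip", "unknown")] += 1
    return counts
```

Because it checks sc-status instead of searching for the text 404, a request whose time-taken happens to be 404 ms is not counted. An open file object can be passed straight in, since iterating over it yields lines.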

Log Parser 2.2

Log Parser 2.2 is what I use for parsing larger volumes of IIS log files. It lets you query the data with SQL-like statements and send the results to output formats such as CSV files. Download Log Parser 2.2 here.

I’ll be back to add more Log Parser query examples once I dig them out of my work notes.

Here is a simple example that loads one day’s log into Log Parser’s data grid viewer:

C:\temp\logs\logparser "select * from u_ex180131.log" -i:IISW3C -o:datagrid

After running this command, Log Parser opens a window with your log data. From there, you can copy it out to Excel for analysis.
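Log Parser’s own query syntax isn’t reproduced here. As a swapped-in illustration of the same workflow (SQL over log rows, results out to CSV for Excel), here is a Python sketch using the standard sqlite3 and csv modules. It assumes the log lines have already been parsed into dicts keyed by IIS field names (c-ip, cs-uri-stem, sc-status); the function name is hypothetical.

```python
import csv
import io
import sqlite3


def top_404_sources(entries, limit=10):
    """Load parsed log entries into an in-memory SQLite table, query them, return CSV text.

    `entries` is an iterable of dicts with 'c-ip', 'cs-uri-stem' and 'sc-status' keys.
    """
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE log (c_ip TEXT, uri TEXT, status INTEGER)")
    db.executemany(
        "INSERT INTO log VALUES (?, ?, ?)",
        ((e["c-ip"], e["cs-uri-stem"], int(e["sc-status"])) for e in entries),
    )
    # Which clients hit the most missing files, and how many different ones?
    rows = db.execute(
        """SELECT c_ip, COUNT(*) AS hits, COUNT(DISTINCT uri) AS distinct_uris
           FROM log WHERE status = 404
           GROUP BY c_ip ORDER BY hits DESC, c_ip LIMIT ?""",
        (limit,),
    ).fetchall()
    db.close()

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["c-ip", "hits", "distinct_uris"])
    writer.writerows(rows)
    return out.getvalue()
```

Writing the returned text to a .csv file gives you something Excel opens directly. A client with many distinct missing URIs is usually more interesting than one that keeps requesting the same stale link.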

I also came across a page for a freeware OLE DB extension for Log Parser. According to that page, it lets you query any OLE DB data source, which Log Parser doesn’t support natively.

http://gluegood.blogspot.com/2008/04/freeware-logparseroledb.html

Video: How to Use Log Parser 2.2

Countersurveillance and SiteSpy

Now that I’ve covered how to find recon attempts in a log file using Notepad++ and Log Parser, I’ll share my personal ace in the hole: SiteSpy.

SiteSpy is an application monitor I developed, originally by accident, back in 2002 while I was teaching web programming at Modesto Institute of Technology (MIT).

SiteSpy takes advantage of existing Microsoft technologies by running a monitor in the same memory space as the web application.

SiteSpy sniffs out session connections in real time and displays them on a webpage that refreshes frequently. Items of interest bubble up to the top for review, and bot traffic can be filtered out so recon stands out more clearly. It works well, but it is not 100% effective.
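SiteSpy itself isn’t shown here, so the following is not SiteSpy. It is a hypothetical Python WSGI middleware sketching the same general idea: a monitor running in the same process as the web application that counts 404 responses per client, skips user agents on a hypothetical known-bot list, and ranks the noisiest sources first. The class name and bot list are assumptions for illustration.

```python
from collections import Counter

# Hypothetical user-agent substrings treated as known crawlers and filtered out.
KNOWN_BOTS = ("googlebot", "bingbot")


class NotFoundMonitor:
    """WSGI middleware that records 404 responses per client IP, in-process."""

    def __init__(self, app, known_bots=KNOWN_BOTS):
        self.app = app
        self.known_bots = tuple(b.lower() for b in known_bots)
        self.hits = Counter()  # client IP -> number of 404 responses
        self.paths = {}        # client IP -> set of missing paths requested

    def __call__(self, environ, start_response):
        ip = environ.get("REMOTE_ADDR", "unknown")
        agent = environ.get("HTTP_USER_AGENT", "").lower()
        path = environ.get("PATH_INFO", "/")

        def spy_start_response(status, headers, exc_info=None):
            # WSGI status strings look like "404 Not Found"
            is_bot = any(b in agent for b in self.known_bots)
            if status.startswith("404") and not is_bot:
                self.hits[ip] += 1
                self.paths.setdefault(ip, set()).add(path)
            return start_response(status, headers, exc_info)

        return self.app(environ, spy_start_response)

    def top(self, n=10):
        """Noisiest 404 sources first, so items of interest bubble up."""
        return self.hits.most_common(n)
```

Wrapping an existing WSGI app is one line, app = NotFoundMonitor(app), and a status page could render its top() list on every refresh. User agents are trivial to spoof, so treat the bot filter as noise reduction, not as a trust decision.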

SiteSpy Recon Detection Example

Here is a probing event I caught. The visitor addressed the server by its IP address instead of its hostname, bypassing DNS, and probed for a nonexistent file called “/admin/config.php”, all the way from Ramallah, Palestine.

SiteSpy showed me a hit on the 404 page right after the visitor received the initial 404 error; otherwise, I would not have seen it until much later. I was able to update the firewall within minutes, denying them the time and space to do more recon, or launch an actual attack, from that IP range, at least for now.
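Turning a single offending IP into a range-wide block is mostly CIDR arithmetic. A minimal Python sketch using the standard ipaddress module; the /24 prefix, the rule name, and the example address (from the 203.0.113.0/24 documentation range) are assumptions, it targets IPv4, and the output is a Windows netsh advfirewall command string to review before you run it.

```python
import ipaddress


def block_range(ip, prefix=24):
    """Return the network of the given prefix length that contains ip."""
    return ipaddress.ip_network(f"{ip}/{prefix}", strict=False)


def netsh_block_rule(ip, prefix=24, name="Block recon source"):
    """Build an inbound block rule for the whole range (assumed /24 by default)."""
    net = block_range(ip, prefix)
    return (
        f'netsh advfirewall firewall add rule name="{name}" '
        f"dir=in action=block remoteip={net}"
    )
```

For example, block_range("203.0.113.57") is 203.0.113.0/24. A wider prefix blocks more of the attacker’s neighborhood but also more innocent visitors, so pick it deliberately.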

In Conclusion

You don’t need a lot of fancy cybersecurity tools to do a little blue teaming. All you need is Notepad++ and the desire to learn.

The most important thing when reviewing a web application’s log files is to first know the application and all possible URL patterns.

Once you have established normal patterns, you can more easily find things that seem out of place.
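Once you know the application’s URL patterns, you can write them down and let code flag everything else. A minimal Python sketch; the patterns below are hypothetical placeholders for a small site and would need to be replaced with your application’s real routes.

```python
import re

# Hypothetical "normal" URL patterns for a small site; replace with your own routes.
KNOWN_PATTERNS = [
    re.compile(r"^/$"),
    re.compile(r"^/index\.html$"),
    re.compile(r"^/blog/[a-z0-9-]+/?$"),
    re.compile(r"^/images/[\w.-]+\.(png|jpg|gif)$", re.IGNORECASE),
]


def out_of_place(paths, patterns=KNOWN_PATTERNS):
    """Return the requested paths that match none of the known URL patterns."""
    return [p for p in paths if not any(rx.match(p) for rx in patterns)]
```

Anything this returns is a request the application was never designed to serve, which is exactly the kind of line worth a closer look in the logs.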

Hope this helps someone!

Regards,

Cyber Abyss